The integration of Artificial Intelligence presents a duality of profound opportunity and significant, high-stakes risk. For senior leadership, the challenge is not merely activating AI, but strategically embedding it within the corporate structure to ensure security and compliance. The three lines of defense model offers a proven framework for this challenge, providing clear accountability for risk ownership, oversight, and independent assurance.

Establishing a Strategic Foundation for AI Governance

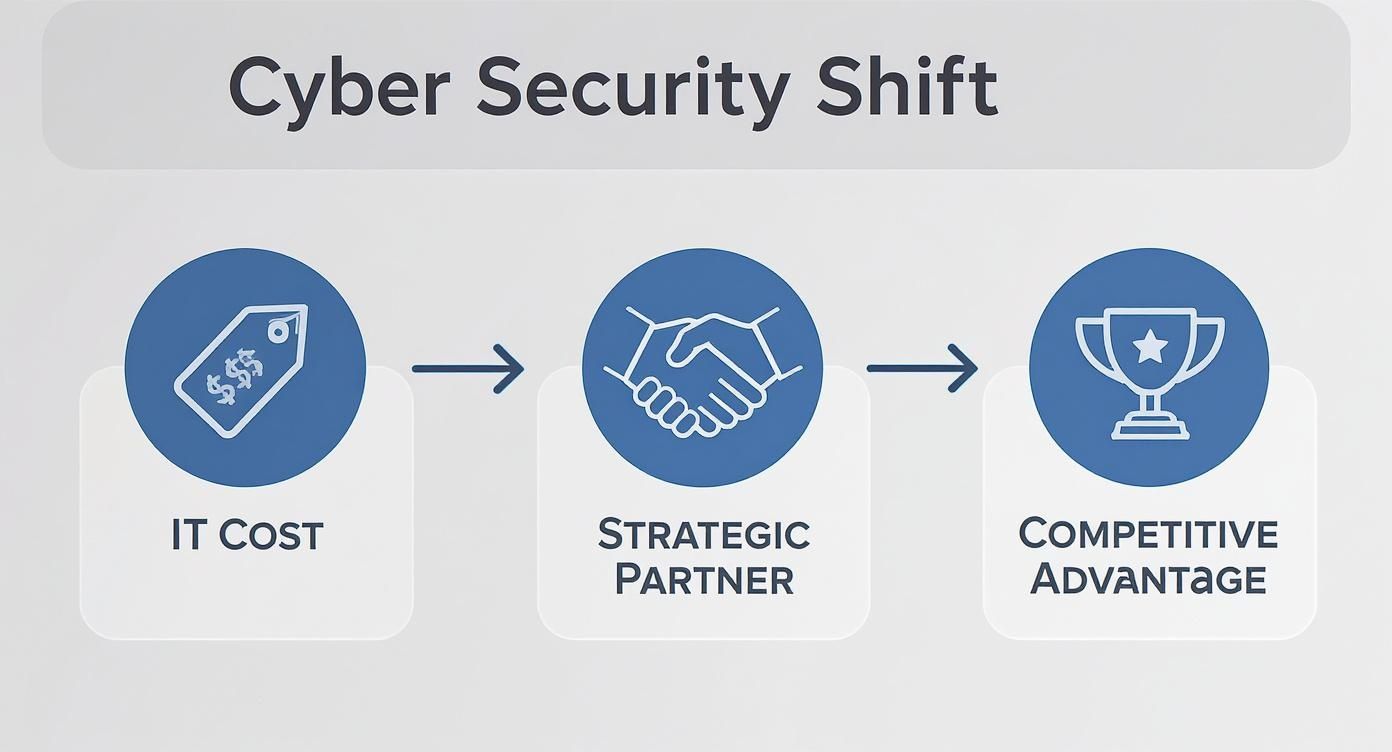

For results-oriented leaders in Germany, the adoption of any new framework must deliver demonstrable business value. The integration of AI is not an incremental technology upgrade; it is a fundamental operational shift that introduces novel risks across operations, compliance, and corporate reputation.

Therefore, a structured governance approach is not an impediment to innovation. It is the essential scaffolding required to scale AI capabilities securely and sustainably.

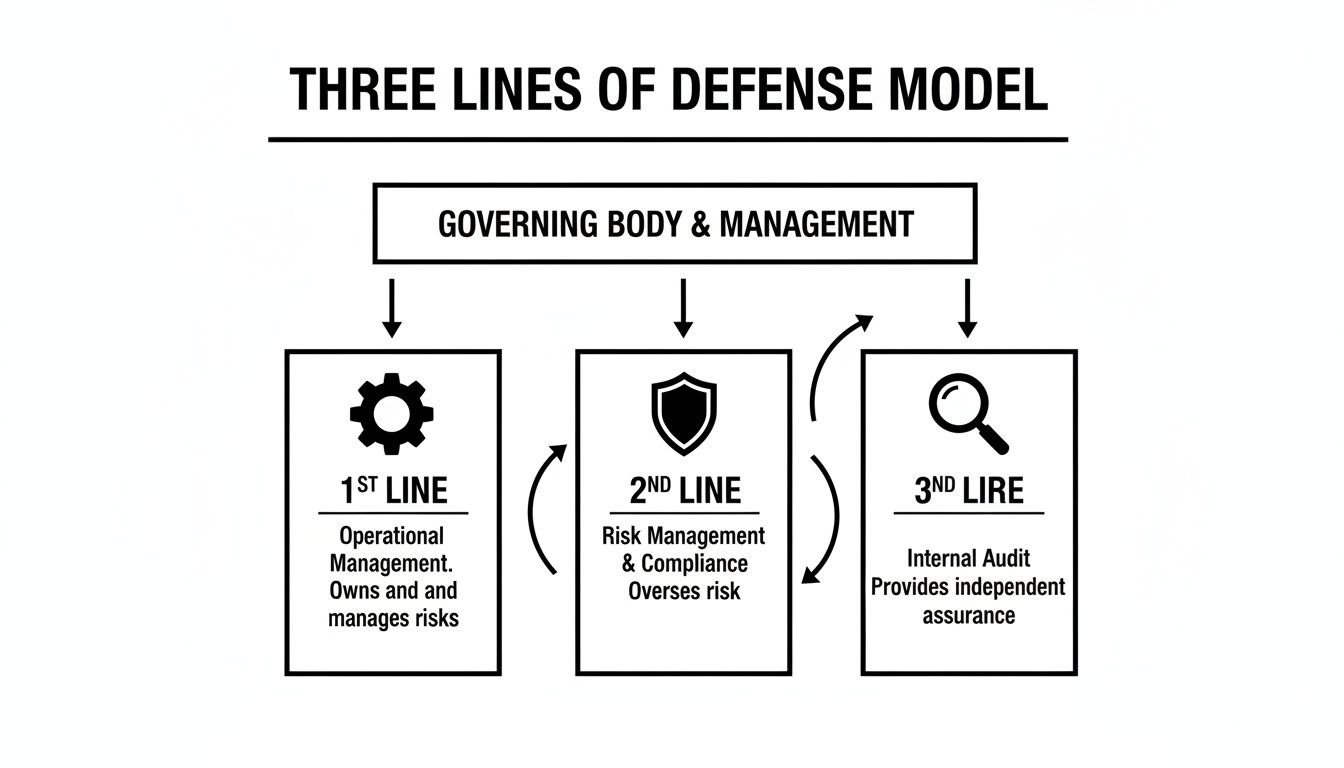

The Three Lines of Defense model provides this structure. It translates the abstract concept of "risk management and compliance" into an operational blueprint that delineates responsibilities. This is not about creating bureaucracy; it is about architecting a system of checks and balances that empowers teams to innovate with confidence, operating within defined, secure parameters.

This diagram from the Institute of Internal Auditors (IIA) illustrates the core principles and structure of the updated model, which the IIA published in 2020 as the "Three Lines Model".

This visualization effectively highlights the necessary collaboration and communication between the lines. It depicts a fluid system, not a rigid hierarchy, overseen by the Governing Body with value creation as the ultimate objective.

Core Principles of the Model

The efficacy of this model in managing the complexities of AI is rooted in several core principles:

- Clarified Roles and Responsibilities: It explicitly assigns accountability for day-to-day risk management (First Line), specialist oversight (Second Line), and independent assurance (Third Line).

- Enhanced Accountability: Clear ownership eliminates ambiguity. Risk management becomes an active, shared responsibility across the enterprise, rather than the isolated concern of a single department.

- Improved Communication: The framework establishes formal channels for communication among operational teams, risk functions, and senior leadership, ensuring critical information is escalated efficiently.

Here is a summary of how this structure applies specifically to AI:

The Three Lines of Defense for AI at a Glance

| Line of Defense | Core Function | Primary Responsibility |
|---|---|---|
| First Line | Owns and Manages Risk | AI development and operations teams building and deploying models responsibly. |
| Second Line | Oversees Risk | Risk, compliance, and legal functions setting AI policies and monitoring adherence. |
| Third Line | Provides Independent Assurance | Internal audit testing the effectiveness of AI controls and governance processes. |

This structure helps ensure that powerful AI is not just built, but built correctly.

Applying artificial intelligence within the compliance function itself can further enhance this framework, improving both efficiency and risk management capabilities. Ultimately, applying the Three Lines model transforms risk management from a reactive necessity into a strategic enabler of confident, forward-moving innovation.

Deconstructing Each Line of Defense

Effective implementation of the Three Lines of Defense model requires a precise understanding of each component. This is not about creating bureaucratic silos, but engineering a collaborative system of checks and balances designed for clarity and efficiency. For leaders in Germany's engineering-centric economy, an appropriate analogy is the construction of a high-performance automobile.

Each line has a distinct function, yet all are interconnected. A clear mental model of these roles helps them work together as a robust governance structure.

This diagram illustrates the specific functions of each line within the model.

As depicted, there is a clear flow of responsibility, from direct, operational ownership to fully independent assurance—a design for comprehensive oversight.

The First Line: Operational Management

The first line comprises the operational teams—the engineers and assembly crews on the factory floor. In the context of AI, these are the data scientists, developers, and business unit leaders actively building and deploying AI solutions.

Their primary function is to own and manage risk at its source. This team is accountable for ensuring AI models are built correctly, data integrity is maintained, and initial quality controls are met. They are responsible for the day-to-day performance and risk management of the systems they create.

Just as an engineering team is responsible for the integrity of every component in a vehicle, your first line is responsible for the integrity of every AI application deployed. This is where risk management begins.

In this context, it is worth noting that proactive tools such as integrity assessments act as a first line of defense against human-factor risks, which are often the most unpredictable.

The Second Line: Risk and Compliance

Continuing the automotive analogy, the second line functions as the quality assurance and safety testing department. This group does not build the vehicle but sets the standards, monitors processes, and ensures the final product meets all regulatory and internal requirements.

This line includes functions such as Risk Management, Compliance, Legal, and Information Security. Their role is to provide independent oversight and expertise to the first line. They develop the policies, frameworks, and controls that guide AI development and challenge the first line's risk management activities to validate their effectiveness.

Key functions of the second line include:

- Policy Development: Creating enterprise-wide AI usage policies and ethical guidelines.

- Control Monitoring: Continuously assessing the effectiveness of risk controls implemented by the first line.

- Expertise Provision: Offering specialized guidance on complex areas like data privacy, model bias, and evolving regulations.

This oversight ensures that innovation does not compromise safety or compliance.

The Third Line: Internal Audit

The third line of defense provides the final layer of assurance. This is the independent crash-test and performance validation team. Typically embodied by Internal Audit, its function is to provide objective and independent assurance directly to the board and senior management.

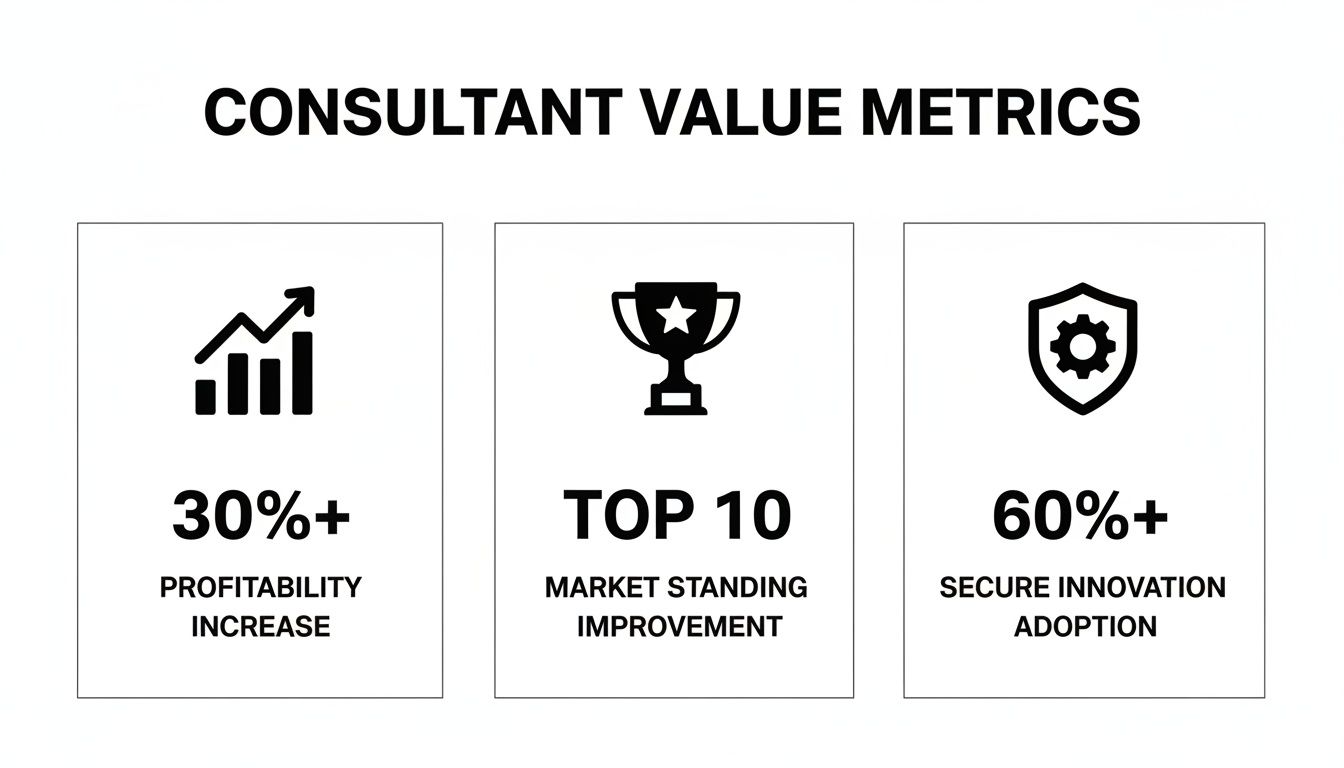

They audit the entire governance system, evaluating the performance of both the first and second lines. Their organizational separation from day-to-day operations and oversight enables an unbiased assessment of the enterprise's overall risk and control environment.

The model is also widely adopted: a global survey found that 75% of organizations, including many in Germany, follow this framework, and some DAX firms reported a 15% reduction in compliance costs after adoption by eliminating duplicated effort.

Applying the Model to Enterprise AI Programs

Adapting a classic risk management framework to the dynamic domain of Artificial Intelligence requires more than direct translation; it demands thoughtful application. While the core principles of the three lines of defense remain valid, the specific responsibilities must be carefully mapped to the unique characteristics and risks of the AI lifecycle. This mapping is what enables effective oversight at every stage, from initial concept to a model in full production.

This is not a theoretical exercise but a strategic imperative for any organization serious about scaling AI without incurring significant operational or reputational damage. Without defined roles, accountability becomes diffuse, risks go unaddressed, and the transformative potential of AI is undermined.

The First Line in AI Operations

The first line consists of the teams directly building, deploying, and managing AI systems on a day-to-day basis. They are the initial owners of any emergent risks.

For an AI program, the first line typically includes:

- AI Engineering and Data Science Teams: The builders, responsible for model development, data validation, accuracy checks, and implementing secure coding practices from inception.

- Business Unit Leaders: The sponsors of AI initiatives to achieve specific business objectives. They are ultimately accountable for the outcomes—and associated risks—of these tools within their domains.

- IT Operations and MLOps Teams: The maintainers, responsible for infrastructure management, deployment pipelines, and continuous monitoring of AI model performance in production.

Their primary mandate is to build and operate AI systems within the boundaries established by risk and compliance, handling initial quality assurance from data integrity to bias detection. They are the foundation of AI governance.

The Second Line for AI Oversight

While the first line executes, the second line establishes the rules of engagement and provides expert oversight. This group creates the governance framework that enables the first line to operate with speed and safety. They are intentionally removed from the daily development cycle but remain deeply integrated into the AI lifecycle.

This oversight is non-negotiable. Without a strong second line, the first line will tend to prioritize velocity over security, potentially exposing the firm to significant regulatory or ethical liabilities. We explore this further in our guide to revising enterprise AI security for 2025.

Functions within the second line for AI typically include:

- AI Security Teams: These teams perform adversarial testing, probe models for vulnerabilities, and assess risks such as data poisoning.

- Legal and Compliance Departments: They author the AI rulebook, conduct ethical reviews, and ensure compliance with regulations like GDPR and the EU AI Act, which entered into force in August 2024 and whose obligations are being phased in through 2027.

- Data Governance Functions: These teams set policies for data provenance, quality, and privacy—all critical components for building trustworthy AI.

This line exists to challenge the first line's assumptions, monitor for issues like model drift and bias, and report on the enterprise's aggregate AI risk posture to senior leadership. They are the policy guardians and expert risk advisors.
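To make "monitor for model drift" more concrete, here is a minimal Python sketch of one widely used drift metric, the Population Stability Index (PSI), which compares how a feature is distributed in production against its training baseline. The choice of PSI, the equal-width binning, and the 0.1 / 0.25 action thresholds are assumptions (common industry rules of thumb), not something the three lines model prescribes; the function names are hypothetical.

```python
import math
from typing import List, Sequence


def population_stability_index(baseline: Sequence[float],
                               production: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between a baseline (training) sample and a production sample.

    Bin edges are equal-width over the baseline's min/max range; production
    values outside that range are clipped into the edge bins.
    """
    if not baseline or not production:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant baseline

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected, actual = bin_shares(baseline), bin_shares(production)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_status(psi: float) -> str:
    """Map a PSI value to an action using assumed rule-of-thumb cutoffs."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "monitor"
    return "escalate to second line"


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    shifted = [0.5 + i / 200 for i in range(100)]
    print(drift_status(population_stability_index(baseline, baseline)))  # stable
    print(drift_status(population_stability_index(baseline, shifted)))   # escalate to second line
```

In a setup like this, the first line (MLOps) could run the check on a schedule, while the second line owns the thresholds and decides what an escalation triggers.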

The Third Line for Independent AI Assurance

The third line provides the ultimate level of assurance through an objective, independent review of the entire AI governance framework. This role is almost exclusively performed by Internal Audit, which operates independently of the first two lines and reports its findings directly to the board or its audit committee.

The third line's function is to answer one critical question for the board: "Is our AI risk management framework operating as designed and effectively mitigating risk?" Their independent validation provides the confidence required to make significant strategic investments.

Their mission is not to duplicate the work of the other lines but to test their effectiveness. An internal audit of an AI program would not involve building a model or writing policy. Instead, it would:

- Assess whether the first line consistently adheres to established AI development and security protocols.

- Evaluate whether the second line’s policies are robust and whether their monitoring activities are effective at identifying real issues.

- Verify that the overall AI governance structure is sufficient to manage new and emerging AI-related risks.
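As an illustration of how the third line tests controls rather than duplicating them, the sketch below re-performs a sample-based test of a deployment control: it draws a reproducible random sample of release records and checks whether each one has both a completed first-line checklist and a second-line sign-off. The record fields, the 5% tolerable exception rate, and all names are hypothetical assumptions for this example.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DeploymentRecord:
    """Hypothetical evidence captured for each AI model release."""
    model_id: str
    checklist_completed: bool   # first-line control
    second_line_signoff: bool   # second-line review


@dataclass
class AuditResult:
    sampled: int
    exceptions: List[str]
    exception_rate: float
    control_effective: bool


def audit_deployment_controls(records: List[DeploymentRecord],
                              sample_size: int,
                              tolerable_rate: float = 0.05,
                              seed: int = 2024) -> AuditResult:
    """Sample releases and measure how often the control was not operated."""
    if not records or sample_size <= 0:
        raise ValueError("need at least one record and a positive sample size")
    rng = random.Random(seed)  # fixed seed so the sample can be re-performed
    sample = rng.sample(records, min(sample_size, len(records)))
    exceptions = [r.model_id for r in sample
                  if not (r.checklist_completed and r.second_line_signoff)]
    rate = len(exceptions) / len(sample)
    return AuditResult(len(sample), exceptions, rate, rate <= tolerable_rate)
```

A fixed seed is a deliberate choice here: it lets the audit committee or an external reviewer re-draw exactly the same sample when validating the finding.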

By systematically applying the three lines of defense to AI programs, an organization can build a governance system that is structured, accountable, and resilient. This framework transforms risk management from a reactive, compliance-driven exercise into a proactive, strategic advantage that fuels safe and confident innovation.

The Strategic Business Benefits of AI Governance

For any executive focused on business outcomes, a new framework must deliver tangible value. Adopting the three lines of defense for AI governance is not an academic exercise; it is a strategic decision that creates a distinct competitive advantage and safeguards the organization's future.

This structured approach reframes risk management from a reactive cost center to a proactive enabler of innovation. By establishing clear lines of accountability, organizations can pursue ambitious AI initiatives with greater confidence, knowing a robust safety net is in place. The benefits extend far beyond risk mitigation.

Enhanced Regulatory Preparedness

As regulatory scrutiny intensifies, particularly with GDPR and the EU AI Act (in force since August 2024, with most obligations applying from August 2026), demonstrable due diligence is non-negotiable. The three lines of defense model creates a clear, auditable trail of all risk management activities.

This structure proves to regulators and auditors that your organization has a systematic process for identifying, managing, and overseeing AI risks. It proactively answers the critical question, "How do you ensure your AI systems are compliant and secure?" before an audit begins. This preparedness can significantly reduce exposure to compliance-related penalties and reputational damage.

Improved Operational Efficiency

Ambiguity is the adversary of efficiency. When responsibility for risk is undefined, teams either duplicate effort or, more dangerously, overlook critical risks because each party assumes another is accountable. This framework eliminates such chaos.

By explicitly assigning ownership (First Line), oversight (Second Line), and assurance (Third Line), the model streamlines workflows and clarifies responsibilities. This clear division of labor ensures resources are deployed effectively, prevents redundant activities, and allows teams to focus on their core functions. A firm grasp of these responsibilities is fundamental, a topic we address in our guide on system engineering and IT governance.

Strengthened Stakeholder Trust

Ultimately, trust is the currency of modern business. A transparent and robust governance model sends a powerful signal to the board, investors, partners, and customers. It demonstrates that your application of AI is not only innovative but also profoundly responsible.

The model's track record is evident in the European financial sector, where the European Central Bank has long advocated its use (see, for example, an ECB speech from 2018) and Germany's regulator, BaFin, actively promotes it. The structure also held up during the pandemic: German banks reduced non-performing loans from 2.1% in 2019 to 1.4% in 2021. As leading automotive and manufacturing firms scale their AI programs, adopting this battle-tested model is a reliable path to building similar resilience.

Implementing the Three Lines of Defense for AI transforms risk management into a strategic asset. It signals to the market that your organization is a mature, reliable innovator, building the essential trust required for long-term growth and stability.

With the theory established, the critical question becomes: how do you translate the three lines of defense model from a concept into a functioning operational reality?

For senior leaders, the goal is not to create more bureaucracy but to implement a robust structure that enables—rather than stifles—safe AI innovation. The most effective approach is to begin with a high-impact pilot project, demonstrate value, and then scale the framework across the enterprise.

This roadmap is designed to build momentum by delivering value quickly and managing risk methodically, avoiding the pitfalls of a large-scale, top-down rollout.

Phase 1: Secure Executive Sponsorship and Form a Committee

First, secure unequivocal support from the C-suite. Without a clear mandate from senior leadership, any governance initiative will fail to gain traction against competing priorities. Executive sponsorship provides the necessary authority to align the organization.

Next, assemble a cross-functional AI Governance Committee. This body will serve as the central nervous system for AI governance, comprising representatives from all three lines and key business unit leaders who are active AI stakeholders.

Initial mandates for this committee include:

- Defining an AI Risk Appetite Statement: This document articulates the types and levels of risk the organization is willing to accept in pursuit of its AI objectives.

- Establishing a High-Level AI Usage Policy: This sets the fundamental principles for ethical and secure AI development and deployment across the business.

Phase 2: Develop Foundational Governance Artifacts

With the committee established, the next step is to build the operational tools. The most critical of these is the AI Risk Register. This is not a static document but a dynamic log that identifies, categorizes, and prioritizes all potential risks associated with AI projects.

The register must be specific, covering risks related to:

- Data privacy and security (e.g., GDPR compliance)

- Model accuracy, fairness, and bias

- Operational resilience and model explainability

- Risks associated with third-party AI vendors
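A minimal sketch of such a register in Python, assuming a simple likelihood × impact score on 1 to 5 scales and a per-category maximum score derived from the risk appetite statement set in Phase 1. The categories mirror the list above; the scoring scheme, the appetite values, and the class names are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class RiskCategory(Enum):
    DATA_PRIVACY_SECURITY = "Data privacy and security"
    ACCURACY_FAIRNESS_BIAS = "Model accuracy, fairness, and bias"
    RESILIENCE_EXPLAINABILITY = "Operational resilience and explainability"
    THIRD_PARTY_VENDOR = "Third-party AI vendors"


@dataclass
class AIRisk:
    risk_id: str
    category: RiskCategory
    description: str
    owner: str       # accountable first-line owner
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (minor) to 5 (severe)

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


# Hypothetical appetite: the highest score tolerated per category.
HYPOTHETICAL_APPETITE: Dict[RiskCategory, int] = {
    RiskCategory.DATA_PRIVACY_SECURITY: 6,
    RiskCategory.ACCURACY_FAIRNESS_BIAS: 8,
    RiskCategory.RESILIENCE_EXPLAINABILITY: 10,
    RiskCategory.THIRD_PARTY_VENDOR: 9,
}


class AIRiskRegister:
    def __init__(self, appetite: Dict[RiskCategory, int]) -> None:
        self.appetite = appetite
        self._risks: Dict[str, AIRisk] = {}

    def add(self, risk: AIRisk) -> None:
        self._risks[risk.risk_id] = risk  # re-adding an ID updates the entry

    def prioritized(self) -> List[AIRisk]:
        """All risks, highest score first."""
        return sorted(self._risks.values(), key=lambda r: r.score, reverse=True)

    def breaches(self) -> List[AIRisk]:
        """Risks scoring above the appetite for their category."""
        return [r for r in self.prioritized() if r.score > self.appetite[r.category]]
```

Entries returned by `breaches()` are the ones the Governance Committee would need to treat, formally accept, or escalate.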

Concurrently, the committee must draft an initial AI Governance Checklist. This practical tool translates high-level policy into concrete, actionable controls, providing first-line teams with a standardized set of requirements to verify before any new AI model is deployed.
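The checklist can be expressed as a simple pre-deployment gate. In this hypothetical Python sketch, each item records which line verifies it, and a model is approved only if evidence exists for every item; missing evidence counts as not done. The items, keys, and function names are illustrative assumptions; an actual checklist would be derived from your own AI usage policy.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ChecklistItem:
    key: str
    requirement: str
    verified_by: str  # which line checks the evidence


# Hypothetical checklist; real items would come from the AI usage policy.
AI_DEPLOYMENT_CHECKLIST: Tuple[ChecklistItem, ...] = (
    ChecklistItem("dpia", "Data protection impact assessment completed (GDPR)",
                  "Second line: Legal / Data Privacy"),
    ChecklistItem("bias_eval", "Fairness and bias evaluation documented", "First line"),
    ChecklistItem("model_card", "Model behavior and limitations documented", "First line"),
    ChecklistItem("security_review", "Adversarial and security testing passed",
                  "Second line: InfoSec"),
    ChecklistItem("drift_monitoring", "Production drift monitoring configured",
                  "First line: MLOps"),
)


def deployment_gate(evidence: Dict[str, bool],
                    checklist: Tuple[ChecklistItem, ...] = AI_DEPLOYMENT_CHECKLIST,
                    ) -> Tuple[bool, List[str]]:
    """Return (approved, open_items); an item without evidence stays open."""
    open_items = [f"{item.requirement} [{item.verified_by}]"
                  for item in checklist if not evidence.get(item.key, False)]
    return (not open_items, open_items)
```

Because the gate is plain code, the same check could run automatically in a deployment pipeline rather than as a manual sign-off step.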

This is not about impeding progress. Documenting risks and creating checklists establishes guardrails that enable teams to move faster and with greater confidence, knowing that critical compliance and security requirements have been addressed from the outset.

This structured approach is already proven in Germany's highly regulated industries. Financial supervisors such as BaFin have promoted the Three Lines of Defense for years. In the EU financial sector, where adoption stands at 88%, firms using this model report an 18% lower rate of data breaches. With most obligations of the EU AI Act applying from August 2026, operationalizing this framework is a critical step to future-proof the organization. Additional data on the model's impact is available from governance.ai.

Mapping AI Governance Roles and Responsibilities

To make the framework tangible, it is essential to map existing roles to their new responsibilities. This is not about wholesale reorganization but about clarifying accountability.

| Role or Department | Line of Defense | Key AI Governance Responsibility |
|---|---|---|
| AI Development Teams / Data Scientists | First | Building, testing, and deploying AI models in line with policies; documenting model behaviour and limitations. |
| Product & Business Unit Owners | First | Owning the AI use case, defining business objectives, and accepting residual risks. |
| Risk Management / Compliance | Second | Setting AI risk policies, creating control frameworks, and independently reviewing first-line activities. |
| Information Security (InfoSec) | Second | Assessing and mitigating cybersecurity risks in AI systems, including data poisoning and model theft. |
| Legal / Data Privacy Office | Second | Ensuring compliance with regulations like GDPR and the AI Act; advising on ethical considerations. |
| Internal Audit | Third | Providing independent, objective assurance that the AI governance framework is effective and operating as intended. |
| C-Suite / Executive Leadership | Oversight | Championing the governance framework, setting the risk appetite, and providing resources. |

This table clarifies how different business functions integrate into the framework, ensuring a shared understanding of roles in managing AI risk effectively.
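The mapping also lends itself to a simple automated check of the separation the model depends on. The Python sketch below encodes the table's role-to-line mapping and flags anyone whose assignments span lines that should stay separate. The two conflict rules (internal auditors must not hold first- or second-line roles, and no one should both own and oversee the same risk) are simplified assumptions for illustration; the function name is hypothetical.

```python
from typing import Dict, List, Set

# Role-to-line mapping from the roles and responsibilities table.
ROLE_TO_LINE: Dict[str, str] = {
    "AI Development Teams / Data Scientists": "First",
    "Product & Business Unit Owners": "First",
    "Risk Management / Compliance": "Second",
    "Information Security (InfoSec)": "Second",
    "Legal / Data Privacy Office": "Second",
    "Internal Audit": "Third",
    "C-Suite / Executive Leadership": "Oversight",
}


def independence_conflicts(assignments: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """Return people whose roles combine lines that should stay separate."""
    conflicts: Dict[str, List[str]] = {}
    for person, roles in assignments.items():
        lines = {ROLE_TO_LINE[role] for role in roles}  # unknown roles raise KeyError
        auditor_conflict = "Third" in lines and bool(lines & {"First", "Second"})
        owner_overseer_conflict = {"First", "Second"} <= lines
        if auditor_conflict or owner_overseer_conflict:
            conflicts[person] = sorted(lines)
    return conflicts
```

Executive roles are deliberately not flagged here, since the table places the C-suite in an oversight position above the three lines.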

Phase 3: Pilot and Refine with High-Impact Projects

Do not attempt a comprehensive rollout initially. Instead, select one or two high-impact AI projects to pilot the new governance framework. These pilots serve as a real-world testbed for refining checklists, processes, and committee-team interactions.

Treat this phase as a learning exercise. Is the checklist practical for an agile development team? Are risk reporting channels functioning smoothly? The feedback gathered here is invaluable for optimizing the framework before broader implementation.

The pilot phase accomplishes two critical objectives:

- Demonstrates Value: A successful pilot provides a strong business case, showing how effective governance facilitates project success rather than hindering it.

- Refines the Process: It exposes friction points and gaps in the initial design, enabling practical adjustments based on operational realities.

Phase 4: Scale and Embed Across the Enterprise

Once the model has been proven and refined, it is time to scale. This involves formally embedding the three lines of defense roles and responsibilities into job descriptions, project management methodologies, and internal audit plans.

Scaling requires more than a memorandum; it necessitates continuous communication and training. This includes sessions to educate first-line teams on their risk ownership responsibilities and workshops to align second-line functions. For teams aiming for rapid execution within this structure, our 21-Day AI Delivery Framework offers a compatible approach for fast, de-risked implementation.

By following this phased roadmap, you can implement a robust AI governance framework that is both effective and practical, transforming risk management from an operational burden into a strategic advantage.

Building Resilient AI Innovation

The three lines of defense model is not merely a risk management framework; it is a strategic playbook for innovating with confidence and building organizational resilience. By establishing clear delineations for ownership, oversight, and assurance, companies can master the complexities of AI adoption without jeopardizing operations or reputation. This is not about creating bureaucracy; it is about constructing a robust foundation that enables smart, secure progress.

A structured approach embeds a culture of accountability across the enterprise. It systematically transforms the disruptive potential of AI into a well-governed engine for growth. When every team—from the engineers developing models to the auditors validating controls—understands their precise role, the entire system functions with greater strength and reliability.

The Blueprint for Responsible Leadership

For any leader driving ambitious AI initiatives, this model provides the necessary blueprint. It offers a proven methodology for building AI systems that are secure, compliant, and deliver measurable business value. Adopting this framework is a significant step toward future-proofing your organization.

By implementing the Three Lines of Defense, leadership moves beyond simply approving AI projects. It demonstrates a tangible commitment to responsible technological stewardship, building the trust with customers, regulators, and investors that is essential for long-term success.

Implementing this model is an offensive, not a defensive, strategy. It provides the structural integrity required to pursue major AI objectives without assuming undue financial, legal, or reputational risk.

The primary benefits are:

- Unambiguous Ownership: It eliminates confusion by assigning direct risk ownership to the teams building and operating AI systems.

- Independent Oversight: It ensures that expert functions like compliance and security have the authority to effectively challenge and guide frontline teams.

- Objective Assurance: It provides the board and senior executives with an impartial assessment that the governance system is operating as intended.

The ultimate objective is to create an environment where innovation and control are not conflicting forces but complementary ones, working in concert to build a resilient enterprise. By adopting the three lines of defense, you are not just managing risk; you are building a durable competitive advantage through intelligent, powerful, and responsible technology leadership.

Frequently Asked Questions

Implementing a robust governance framework like the three lines of defense inevitably raises questions from leadership and operational teams. Addressing these clearly from the outset is critical for building enterprise-wide confidence and ensuring smooth execution.

Below are common inquiries from managers and executives.

Is This Model Too Bureaucratic For Fast-Moving AI Projects?

A common concern is that such a framework will introduce bureaucracy and impede innovation. However, when implemented correctly, the opposite is true: it provides clarity that accelerates progress. The objective is to establish guardrails, not roadblocks.

For agile AI projects, the key is to integrate the Second Line (Risk and Compliance) directly into development sprints. This "shift-left" approach ensures that security and compliance checks run continuously throughout the development process, not as a final gate that forces rework.

This transforms the model from a rigid process into a support system, empowering teams to innovate rapidly with the confidence that comes from clear accountability.

How Do We Staff These Lines If We Lack In-House AI Expertise?

Sourcing sufficient internal AI expertise to staff all three lines is a common challenge. A pragmatic solution is a hybrid approach: upskill internal talent where possible while engaging external partners to fill immediate capability gaps.

A hybrid staffing model might include:

- First Line: Provide targeted training to existing engineers and data scientists on secure coding for AI and current data privacy regulations.

- Second Line: Augment your internal risk team with external specialists who possess deep expertise in AI regulations.

- Third Line: Have your internal audit team partner with specialist third-party auditors who are proficient in assessing complex AI systems.

This strategy addresses critical knowledge gaps immediately while fostering long-term, in-house expertise.

What Is The Most Critical First Step For Implementation?

The single most critical first step is to establish a cross-functional AI Governance Committee with clear and visible sponsorship from the C-suite. Without executive mandate, any governance initiative will struggle to gain the necessary resources and authority.

This committee must include representatives from all three lines of defense as well as key business leaders. Its initial charter should be to achieve two foundational objectives:

- Craft a unified AI risk appetite statement. This document defines the organization's tolerance for AI-related risks.

- Develop a high-level policy for AI usage. This establishes the core principles guiding all AI development and deployment.

This foundational work aligns the entire organization, from the boardroom to the development teams. It provides the strategic direction necessary for the framework to be effectively adopted at every level, ensuring all stakeholders are working toward the same governance goals.

At Reruption GmbH, we act as "Co-Preneurs for the AI Era," helping you build strong governance that enables, rather than hinders, innovation. We partner with you to transform your AI strategy into secure, compliant, and production-ready systems. Discover how we can de-risk your AI initiatives by visiting us at https://www.reruption.com.